Difference between revisions of "Weighted Least Squares (WLS)"

(→Basics) |

|||

| Line 17: | Line 17: | ||

In MM we use SVD. | In MM we use SVD. | ||

| + | |||

| + | Example of trivial MLS is Kernel approximation, which is basically MLS with basis b=1. | ||

| + | \[\begin{array}{l} | ||

| + | \hat u({\bf{p}}) = {{\bf{b}}^{\rm{T}}}{\left( {{{\bf{W}}^{0.5}}{\bf{B}}} \right)^{ - 1}}{{\bf{W}}^{0.5}}{\bf{u}} = 1\left( {\frac{{{{\bf{W}}^{0.5\,}}^T}}{{\left\| {{{\bf{W}}^{0.5}}} \right\|}}} \right){{\bf{W}}^{0.5}}{\bf{u}} = \frac{{\sum {{W_n}{u_n}} }}{{\sum {{W_n}} }}\\ | ||

| + | {\bf{b}} = 1,\,{\bf{W}} = \left[ {\begin{array}{*{20}{c}} | ||

| + | {{{\rm{W}}_1}}&0&0\\ | ||

| + | 0&{...}&0\\ | ||

| + | 0&0&{{{\rm{W}}_n}} | ||

| + | \end{array}} \right],{\bf{B}} = \left[ {\begin{array}{*{20}{c}} | ||

| + | 1\\ | ||

| + | 1\\ | ||

| + | 1 | ||

| + | \end{array}} \right] | ||

| + | \end{array}\]] | ||

| + | This approximation is also well-known as Shephard's method and it is widely used in SPH. | ||

== Normal equations == | == Normal equations == | ||

Revision as of 17:59, 20 October 2016

One of the most important building blocks of the meshless methods is the Moving Least Squares approximation, which is implemented in the EngineMLS class. Check EngineMLS unit tests for examples https://gitlab.com/e62Lab/e62numcodes/blob/master/test/mls_test.cpp

Basics

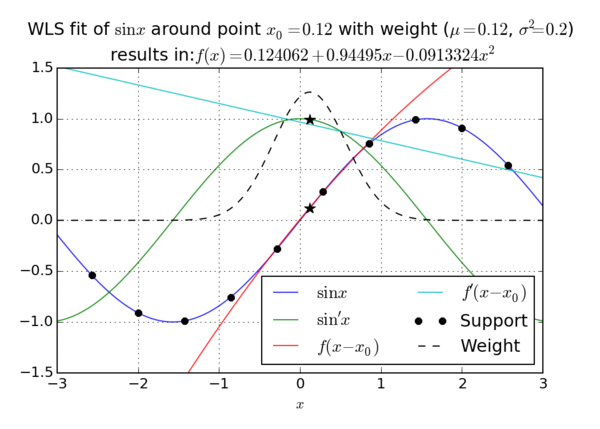

In general, approximation function can be written as \[\hat u({\bf{p}}) = \sum\limits_i^m {{\alpha _i}{b_i}({\bf{p}})} = {{\bf{b}}^{\rm{T}}}{\bf{\alpha }}\] where $\hat u,\,{B_i}\,and\,{\alpha _i}$ stand for approx. function, coefficients and basis function, respectively. We minimize the sum of residuum squares, i.e., the sum of squares of difference between the approx. function and target function, in addition we can also add weight function that controls the significance of nodes, i.e., \[{r^2} = \sum\limits_j^n {W\left( {{{\bf{p}}_j}} \right){{\left( {u({{\bf{p}}_j}) - \hat u({{\bf{p}}_j})} \right)}^2}} = {\left( {{\bf{B\alpha }} - {\bf{u}}} \right)^{\rm{T}}}{\bf{W}}\left( {{\bf{B\alpha }} - {\bf{u}}} \right)\] Where $\bf{B}$ is non-square matrix of dimension $m \times n$ and $\bf{W}$ diagonal matrix of weights. The least squares problem can be solved with three approaches

- Normal equation (fastest – less accurate) – using Cholesky decomposition

- QR decomposition of $\bf{B}$ ($rank(\bf{B})=m$ – number of basis functions)

- SVD decomposition of $\bf{B}$ (more expensive – more reliable, no rank demand)

In MM we use SVD.

Example of trivial MLS is Kernel approximation, which is basically MLS with basis b=1. \[\begin{array}{l} \hat u({\bf{p}}) = {{\bf{b}}^{\rm{T}}}{\left( {{{\bf{W}}^{0.5}}{\bf{B}}} \right)^{ - 1}}{{\bf{W}}^{0.5}}{\bf{u}} = 1\left( {\frac{{{{\bf{W}}^{0.5\,}}^T}}[[:Template:\left\]]} \right\|}}} \right){{\bf{W}}^{0.5}}{\bf{u}} = \frac{{\sum {{W_n}{u_n}} }}{{\sum Template:W n }}\\ {\bf{b}} = 1,\,{\bf{W}} = \left[ {\begin{array}{*{20}{c}} {{{\rm{W}}_1}}&0&0\\ 0&{...}&0\\ 0&0&{{{\rm{W}}_n}} \end{array}} \right],{\bf{B}} = \left[ {\begin{array}{*{20}{c}} 1\\ 1\\ 1 \end{array}} \right] \end{array}\]] This approximation is also well-known as Shephard's method and it is widely used in SPH.

Normal equations

We seek the minimum of \[{\bf{r}} = {\bf{u}} - {\bf{B\alpha }}\], \[{r^2} = {\left( {{\bf{u}} - {\bf{B\alpha }}} \right)^{\rm{T}}}\left( {{\bf{u}} - {\bf{B\alpha }}} \right)\] By seeking the zero gradient in terms of coefficient \[\frac{\partial }{{\partial {\alpha _i}}}\left( {{{\left( {{\bf{u}} - {\bf{B\alpha }}} \right)}^{\rm{T}}}\left( {{\bf{u}} - {\bf{B\alpha }}} \right)} \right) = 0\] resulting in \[{\bf{B}}{{\bf{B}}^{\rm{T}}}{\bf{\alpha }} = {{\bf{B}}^{\rm{T}}}{\bf{u}}\] The coefficient matrix $\bf{B}^T\bf{B}$ is symmetric and positive definite. However, solving above problem directly is poorly behaved with respect to round-off effects since the condition number of $\bf{B}^T\bf{B}$is the square of that of $\bf{B}$. Or in case of weighted LS \[{\bf{WB}}{{\bf{B}}^{\rm{T}}}{\bf{\alpha }} = {\bf{W}}{{\bf{B}}^{\rm{T}}}{\bf{u}}\]

Complexity of Chol: $\frac{{{n}^{3}}}{3}$ complexity of matrix multiplication $n{{m}^{2}}$

QR Decomposition

\[{\bf{B}} = {\bf{QR}} = \left[ {{{\bf{Q}}_1},{{\bf{Q}}_2}} \right]\left[ {\begin{array}{*{20}{c}} {{{\bf{R}}_1}}\\ 0 \end{array}} \right]\] \[{\bf{B}} = {{\bf{Q}}_1}{{\bf{R}}_1}\] $\bf{Q}$ is unitary matrix ($\bf{Q}^{-1}=\bf{Q}^T$). Useful property of a unitary matrices is that multiplying with them does not alter the (Euclidean) norm of a vector, i.e., \[\left\| {{\bf{Qx}}} \right\|{\bf{ = }}\left\| {\bf{x}} \right\|\] And $\bf{R}$ is upper diagonal matrix \[{\bf{R = (}}{{\bf{R}}_{\bf{1}}}{\bf{,}}0{\bf{)}}\] therefore we can say \[\begin{array}{l} \left\| {{\bf{B\alpha }} - {\bf{u}}} \right\| = \left\| {{{\bf{Q}}^{\rm{T}}}\left( {{\bf{B\alpha }} - {\bf{u}}} \right)} \right\| = \left\| {{{\bf{Q}}^{\rm{T}}}{\bf{B\alpha }} - {{\bf{Q}}^{\rm{T}}}{\bf{u}}} \right\|\\ = \left\| {{{\bf{Q}}^{\rm{T}}}\left( {{\bf{QR}}} \right){\bf{\alpha }} - {{\bf{Q}}^{\rm{T}}}{\bf{u}}} \right\| = \left\| {\left( {{{\bf{R}}_1},0} \right){\bf{\alpha }} - {{\left( {{{\bf{Q}}_1},{{\bf{Q}}_{\bf{2}}}} \right)}^{\rm{T}}}{\bf{u}}} \right\|\\ = \left\| {{{\bf{R}}_{\bf{1}}}{\bf{\alpha }} - {{\bf{Q}}_{\bf{1}}}{\bf{u}}} \right\| + \left\| {{\bf{Q}}_2^{\rm{T}}{\bf{u}}} \right\| \end{array}\] Of the two terms on the right we have no control over the second, and we can render the first one zero by solving \[{{\bf{R}}_{\bf{1}}}{\bf{\alpha }} = {\bf{Q}}_{_{\bf{1}}}^{\rm{T}}{\bf{u}}\] Which results in a minimum. We could also compute it with pseudo inverse \[\mathbf{\alpha }={{\mathbf{B}}^{-1}}\mathbf{u}\] Where pseudo inverse is simply \[{{\mathbf{B}}^{-1}}=\mathbf{R}_{\text{1}}^{\text{-1}}{{\mathbf{Q}}^{\text{T}}}\] (once again, R is upper diagonal matrix, and Q is unitary matrix). And for weighted case \[\mathbf{\alpha }={{\left( {{\mathbf{W}}^{0.5}}\mathbf{B} \right)}^{-1}}\left( {{\mathbf{W}}^{0.5}}\mathbf{u} \right)\]

Complexity of QR decomposition \[\frac{2}{3}m{{n}^{2}}+{{n}^{2}}+\frac{1}{3}n-2=O({{n}^{3}})\]

SVD decomposition

In linear algebra, the singular value decomposition (SVD) is a factorization of a real or complex matrix. It has many useful applications in signal processing and statistics.

Formally, the singular value decomposition of an m × n real or complex matrix $\bf{B}$ is a factorization of the form $\bf{B}= \bf{UΣV}*$, where $\bf{U}$ is an m × m real or complex unitary matrix, $\bf{Σ}$ is an m × n rectangular diagonal matrix with non-negative real numbers on the diagonal, and $\bf{V*}$ is an n × n real or complex unitary matrix. The diagonal entries Σ_{i,i} of are known as the singular values of $\bf{B}$. The m columns of $\bf{U}$ and the n columns of $\bf{V}$ are called the left-singular vectors and right-singular vectors of $\bf{B}$, respectively.

The singular value decomposition and the eigen decomposition are closely related. Namely:

- The left-singular vectors of $\bf{B}$ are eigen vectors of $\bf{B*B^*}$.

- The right-singular vectors of $\bf{B}$ are eigen vectors of $\bf{B*B}$.

- The non-zero singular values of $\bf{B}$ (found on the diagonal entries of $\bf{Σ}$) are the square roots of the non-zero eigenvalues of both $\bf{B*B}$ and $\bf{B*B^*}$.

with SVD we can write B as \[\mathbf{B}=\mathbf{U}\sum {{\mathbf{V}}^{\text{T}}}\] where are U and V again unitary matrices and \[\sum \] stands for diagonal matrix of singular values.

Again we can solve either the system or compute pseudo inverse as \[{{\mathbf{B}}^{-1}}={{\left( \mathbf{U}\sum {{\mathbf{V}}^{\text{T}}} \right)}^{-1}}=\mathbf{V}{{\sum }^{-1}}{{\mathbf{U}}^{\text{T}}}\], where $\bf{Σ}$^-1 is trivial, just replace every non-zero diagonal entry by its reciprocal and transpose the resulting matrix. The stability gain is exactly here, one can now set threshold below which the singular value is considered as 0, basically truncate all singular values below some value and thus stabilize the inverse.

SVD decomposition complexity \[2m{{n}^{2}}~+\text{ }2{{n}^{3}}=O({{n}^{3}})\]