Difference between revisions of "K-d tree"

| Line 4: | Line 4: | ||

However, in meshless methods, the stencil/support nodes are usually chosen as the nearest $n$ visible nodes. | However, in meshless methods, the stencil/support nodes are usually chosen as the nearest $n$ visible nodes. | ||

| − | In order to compute nearest neighbors effectively, fast spatial search structures have been developed, such as [https://en.wikipedia.org/wiki/Octree octree], [https://en.wikipedia.org/wiki/Ball_tree ball tree] and [https://en.wikipedia.org/wiki/K-d_tree $k$-d tree]. All of these structures first take a list of $N$ points and build a search structure, enabling subsequent queries for $n$ nearest neighbors to be answered in $O(n\log N)$ time. | + | In order to compute nearest neighbors effectively, fast spatial search structures have been developed, such as [https://en.wikipedia.org/wiki/Octree octree], [https://en.wikipedia.org/wiki/Ball_tree ball tree], [https://en.wikipedia.org/wiki/Cover_tree cover tree] and [https://en.wikipedia.org/wiki/K-d_tree $k$-d tree]. All of these structures first take a list of $N$ points and build a search structure, enabling subsequent queries for $n$ nearest neighbors to be answered in $O(n\log N)$ time. |

The $k$-d tree structure is describe below to give reader a basic idea of how such spatial structures store data. | The $k$-d tree structure is describe below to give reader a basic idea of how such spatial structures store data. | ||

| Line 12: | Line 12: | ||

A $k$-d tree is a binary tree which uses hyperplane space partitioning to divide points into subgroups (subtrees). The plane is chosen based on the depth of the node in a tree. At depth $k$, the $k$-th axis (dimenison) is used to divide the remaining points. Example: in 3 dimensions we have $x$, $y$ and $z$ axes. At root level we decide to divide points according to their $x$ values (we could also do it according to $y$ or $z$ values). Usual criterion for division of points is the median. Thus, at root level, we look for median point by looking at $x$ coordiantes of all points. We put the median point into the root leaf. Remaining points are put into the left and right subtrees. We put the points which have smaller $x$ coorinate into the left subtree and points which have larger $x$ coordinate into the right subtree (or vice versa). We construct the two children nodes of the root from the two subgroups we just created. The procedure is the same, except that we divide the space according to $y$ or $z$ coordinate. We continue this way until we run out of points. The axes used for median search are periodically repeating. | A $k$-d tree is a binary tree which uses hyperplane space partitioning to divide points into subgroups (subtrees). The plane is chosen based on the depth of the node in a tree. At depth $k$, the $k$-th axis (dimenison) is used to divide the remaining points. Example: in 3 dimensions we have $x$, $y$ and $z$ axes. At root level we decide to divide points according to their $x$ values (we could also do it according to $y$ or $z$ values). Usual criterion for division of points is the median. Thus, at root level, we look for median point by looking at $x$ coordiantes of all points. We put the median point into the root leaf. Remaining points are put into the left and right subtrees. We put the points which have smaller $x$ coorinate into the left subtree and points which have larger $x$ coordinate into the right subtree (or vice versa). We construct the two children nodes of the root from the two subgroups we just created. The procedure is the same, except that we divide the space according to $y$ or $z$ coordinate. We continue this way until we run out of points. The axes used for median search are periodically repeating. | ||

| + | Often, dynamic search structures are needed as well, which support node insertion and removal. In the beginning we were using [http://www.cs.umd.edu/~mount/ANN/ ANN] and [https://www.savarese.com/software/libssrckdtree/ libssrckdtree] | ||

| + | libraries for static and dynamic spatial search structures, respectively. Later, we tested four different publicly available C++ libraries: | ||

| + | * [http://www.cs.umd.edu/~mount/ANN/ ANN] | ||

| + | * [https://www.savarese.com/software/libssrckdtree/ libssrckdtree] | ||

| + | * [http://spatial.sourceforge.net/ spatial] | ||

| + | * [https://github.com/jlblancoc/nanoflann/ nanoflann] | ||

| − | + | We tested a typical example of finding support domains of 9 nodes for all nodes, which includes building a tree from a set of points and finding $n$ closest nodes for each point. | |

| + | The results are shown below. | ||

| − | + | [[File:Tree_building_time.jpg]] | |

| − | |||

Nearest neighbor search is a $log n$ procedure, although in practice, especially in higher dimensions, it's closer to o(n). There is a nice description of the algorithm on [https://en.wikipedia.org/wiki/K-d_tree wikipedia] website. We tested ANN and Libssrckdtree implementations. In addition we tested also cover tree nearest neighbor search. | Nearest neighbor search is a $log n$ procedure, although in practice, especially in higher dimensions, it's closer to o(n). There is a nice description of the algorithm on [https://en.wikipedia.org/wiki/K-d_tree wikipedia] website. We tested ANN and Libssrckdtree implementations. In addition we tested also cover tree nearest neighbor search. | ||

Revision as of 13:31, 19 November 2018

In structured meshes, neighborhood relations are implicitly determined by the mapping from the physical to the logical space. In unstructured mesh based approaches, support domains can be determined from the mesh connectivity relations. However, in meshless methods, the stencil/support nodes are usually chosen as the nearest $n$ visible nodes.

In order to compute nearest neighbors effectively, fast spatial search structures have been developed, such as octree, ball tree, cover tree and $k$-d tree. All of these structures first take a list of $N$ points and build a search structure, enabling subsequent queries for $n$ nearest neighbors to be answered in $O(n\log N)$ time. The $k$-d tree structure is describe below to give reader a basic idea of how such spatial structures store data.

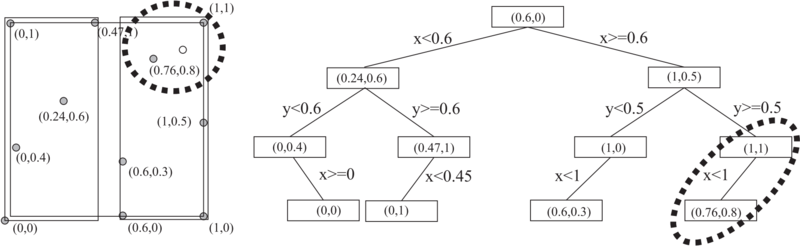

A $k$-d tree is a binary tree which uses hyperplane space partitioning to divide points into subgroups (subtrees). The plane is chosen based on the depth of the node in a tree. At depth $k$, the $k$-th axis (dimenison) is used to divide the remaining points. Example: in 3 dimensions we have $x$, $y$ and $z$ axes. At root level we decide to divide points according to their $x$ values (we could also do it according to $y$ or $z$ values). Usual criterion for division of points is the median. Thus, at root level, we look for median point by looking at $x$ coordiantes of all points. We put the median point into the root leaf. Remaining points are put into the left and right subtrees. We put the points which have smaller $x$ coorinate into the left subtree and points which have larger $x$ coordinate into the right subtree (or vice versa). We construct the two children nodes of the root from the two subgroups we just created. The procedure is the same, except that we divide the space according to $y$ or $z$ coordinate. We continue this way until we run out of points. The axes used for median search are periodically repeating.

Often, dynamic search structures are needed as well, which support node insertion and removal. In the beginning we were using ANN and libssrckdtree libraries for static and dynamic spatial search structures, respectively. Later, we tested four different publicly available C++ libraries:

* ANN * libssrckdtree * spatial * nanoflann

We tested a typical example of finding support domains of 9 nodes for all nodes, which includes building a tree from a set of points and finding $n$ closest nodes for each point. The results are shown below.

Nearest neighbor search is a $log n$ procedure, although in practice, especially in higher dimensions, it's closer to o(n). There is a nice description of the algorithm on wikipedia website. We tested ANN and Libssrckdtree implementations. In addition we tested also cover tree nearest neighbor search.

Note that cover tree implementation is not the best available, but it is the only one to have insert/remove and is written in c++.

References

- Trobec R., Kosec G., Parallel scientific computing : theory, algorithms, and applications of mesh based and meshless methods: Springer; 2015.

- k-d tree. (n.d.). In Wikipedia. Retrieved March 17, 2017, from https://en.wikipedia.org/wiki/K-d_tree