Difference between revisions of "Execution on Intel® Xeon Phi™ co-processor"

Revision as of 19:21, 1 March 2017

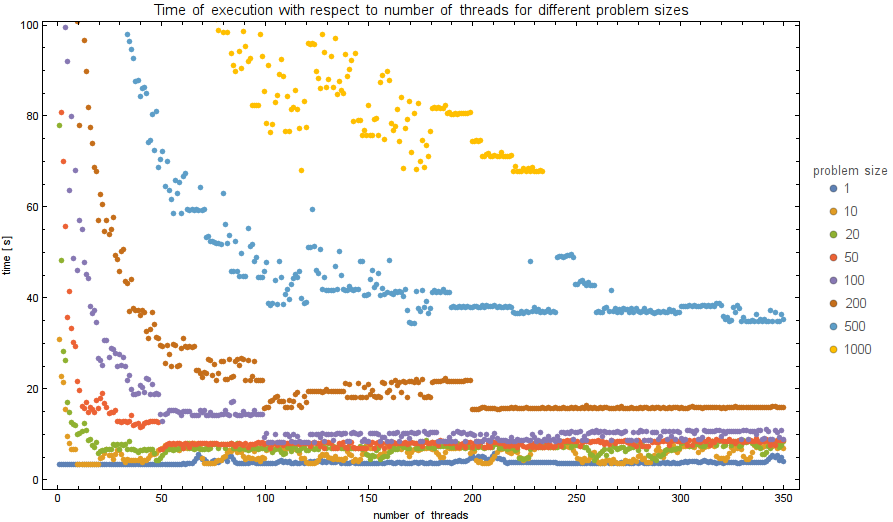

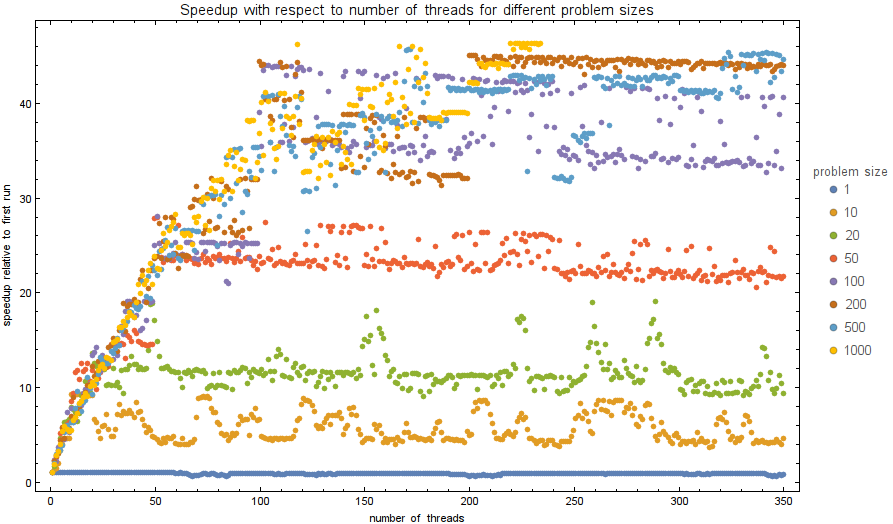

Speedup by parallelization

We tested the speedups on the Intel® Xeon Phi™ with the following code:

```cpp
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <assert.h>
#include <omp.h>
#include <math.h>

int main(int argc, char *argv[]) {
    int numthreads;
    int n;

    assert(argc == 3 && "args: numthreads n");
    sscanf(argv[1], "%d", &numthreads);
    sscanf(argv[2], "%d", &n);

    printf("Init...\n");
    printf("Start (%d threads)...\n", numthreads);
    printf("%d test cases\n", n);

    int m = 1000000;  // inner iterations per test case
    double ttime = omp_get_wtime();

    int i;
    double d = 0;
    // Offload the enclosed block to the first coprocessor (mic:0).
    #pragma offload target(mic:0)
    {
        // reduction(+:d) gives each thread its own copy of d (summed at the
        // end), which avoids a data race on the otherwise shared variable.
        #pragma omp parallel for private(i) schedule(static) num_threads(numthreads) reduction(+:d)
        for (i = 0; i < n; ++i) {
            for (int j = 0; j < m; ++j) {
                d = sin(d) + 0.1 + j;
                d = pow(0.2, d)*j;
            }
        }
    }
    double time = omp_get_wtime() - ttime;
    // Machine-readable result on stderr: n, number of threads, time in seconds.
    fprintf(stderr, "%d %d %.6f\n", n, numthreads, time);
    printf("time: %.6f s\n", time);
    printf("Done d = %.6lf.\n", d);

    return 0;
}
```

The code essentially distributes a problem of size $n\cdot m$ among numthreads threads. We tested the execution time for $n$ from the set $\{1, 10, 20, 50, 100, 200, 500, 1000\}$ and numthreads from $1$ to $350$. The plots of execution times and performance speedups are shown in the figures above.

The code was compiled without warnings or errors using:

```bash
icc -openmp -O3 -qopt-report=2 -qopt-report-phase=vec -o test test.cpp
```

In order to offload to the Intel Xeon Phi, the user must be logged in as root:

```bash
sudo su
```

To run correctly, the Intel compiler and runtime variables must be sourced:

```bash
source /opt/intel/bin/compilervars.sh intel64
```

Finally, the code was tested using the following command, where test is the name of the compiled executable:

```bash
for n in 1 10 20 50 100 200 500 1000; do for nt in {1..350}; do echo $nt $n; ./test $nt $n 2>> speedups.txt; done; done
```

Speedup by vectorization

Intel Xeon Phi has 512-bit vector registers, which means it can perform 8 double-precision (or 16 single-precision) operations at the same time. This is called vectorization and can greatly speed up code execution.
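The lane counts follow directly from the register width. As a small illustration (the names `kVectorBits` and `lanes` are hypothetical, and the sketch assumes the usual 64-bit double and 32-bit float):

```cpp
#include <climits>
#include <cstddef>

// Width of one vector register in bits, as stated in the text.
constexpr std::size_t kVectorBits = 512;

// Number of elements of type T that fit into one vector register.
template <typename T>
constexpr std::size_t lanes() {
    return kVectorBits / (sizeof(T) * CHAR_BIT);
}

// Assumption: double is 64 bits wide and float is 32 bits wide.
static_assert(lanes<double>() == 8, "8 doubles per 512-bit register");
static_assert(lanes<float>() == 16, "16 floats per 512-bit register");
```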

Consider the following code, saved as speedtest.cpp:

```cpp
#include <cmath>
#include <iostream>

int main() {
    const int N = 104;
    double a[N] = {};  // zero-initialized; reading uninitialized values is undefined behavior
    for (int i = 0; i < 1e5; i++)
        for (int j = 0; j < N; j++)
            // each a[j] depends only on its own previous value
            a[j] = std::sin(std::exp(a[j]-j)*3 * i + i*j);
    std::cout << a[4] << "\n";
    return 0;
}
```

Intel's C++ compiler (icpc) vectorizes the inner for loop, so the program runs significantly faster than with vectorization disabled.

The code can be compiled with or without vectorization:

```bash
$ icpc speedtest.cpp -o vectorized_speedtest -O3
$ icpc speedtest.cpp -o unvectorized_speedtest -O3 -no-vec
```

| Machine | ASUS ZenBook Pro UX501VW | Intel® Xeon® CPU E5-2620 v3 | Intel® Xeon® CPU E5-2620 v3 | Intel® Xeon® CPU E5-2620 v3 | Intel® Xeon Phi™ Coprocessor SE10/7120 | Intel® Xeon Phi™ Coprocessor SE10/7120 |

|---|---|---|---|---|---|---|

| Compiler | g++-6.3.1 | g++-4.8.5 | icpc-16.0.1 | icpc-16.0.1 -no-vec | icpc-16.0.1 | icpc-16.0.1 -no-vec |

| Execution time [s] | 0.63 - 0.66 | 0.65 - 0.66 | 0.155 - 0.160 | 0.50 - 0.51 | 0.25 - 0.26 | 11.1 - 11.2 |
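To put the icpc columns of the table into perspective, the ratio of the non-vectorized to the vectorized time can be computed from the midpoints of the measured ranges. This is only a sketch of the arithmetic; the helper names `midpoint` and `vectorization_speedup` are hypothetical. It gives a factor of roughly 3.2 on the Xeon CPU and roughly 44 on the Xeon Phi.

```cpp
// Midpoint of a measured range such as "0.50 - 0.51" (in seconds).
constexpr double midpoint(double lo, double hi) { return 0.5 * (lo + hi); }

// Ratio of the non-vectorized to the vectorized execution time.
constexpr double vectorization_speedup(double t_novec, double t_vec) {
    return t_novec / t_vec;
}

// Values taken from the icpc-16.0.1 columns of the table.
constexpr double xeon_speedup =
    vectorization_speedup(midpoint(0.50, 0.51), midpoint(0.155, 0.160));
constexpr double phi_speedup =
    vectorization_speedup(midpoint(11.1, 11.2), midpoint(0.25, 0.26));
```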

Code incapable of vectorization

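On the other hand, the following very similar code cannot be vectorized, because each iteration of the inner loop needs the value of a computed in the previous iteration:

```cpp
#include <cmath>
#include <iostream>

int main() {
    const int N = 104;
    double a = 0;  // initialized; reading an uninitialized value is undefined behavior
    for (int i = 0; i < 1e5; i++)
        for (int j = 0; j < N; j++)
            // loop-carried dependency: the new a depends on the previous a
            a = std::sin(std::exp(a-j)*3 * i + i*j);
    std::cout << a << "\n";
    return 0;
}
```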

| Machine | ASUS ZenBook Pro UX501VW | Intel® Xeon® CPU E5-2620 v3 | Intel® Xeon® CPU E5-2620 v3 | Intel® Xeon® CPU E5-2620 v3 | Intel® Xeon Phi™ Coprocessor SE10/7120 | Intel® Xeon Phi™ Coprocessor SE10/7120 |

|---|---|---|---|---|---|---|

| Compiler | g++-6.3.1 | g++-4.8.5 | icpc-16.0.1 | icpc-16.0.1 -no-vec | icpc-16.0.1 | icpc-16.0.1 -no-vec |

| Execution time [s] | 0.80 - 0.82 | 0.72 - 0.73 | 0.58 - 0.59 | 0.58 - 0.59 | 10.9 - 11.0 | 10.9 - 11.0 |
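The difference between the two programs can be made explicit with a small sketch. In the array version, element j depends only on its own previous value, so the inner iterations can be executed in any order (and therefore also several at a time). In the scalar version, every iteration consumes the result of the previous one. The function names `update`, `step_array` and `step_scalar` are hypothetical and only serve this illustration.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

constexpr std::size_t N = 104;

// The update used in both benchmarks, for a given old value and indices.
inline double update(double old, int i, int j) {
    return std::sin(std::exp(old - j) * 3 * i + i * j);
}

// Vectorizable form: a[j] depends only on a[j], so the inner iterations
// are independent and may run front to back or back to front.
inline void step_array(std::array<double, N>& a, int i, bool reversed) {
    for (std::size_t k = 0; k < N; ++k) {
        const std::size_t j = reversed ? N - 1 - k : k;
        a[j] = update(a[j], i, static_cast<int>(j));
    }
}

// Non-vectorizable form: each iteration needs the previous result,
// so the iterations must run strictly one after another.
inline double step_scalar(double a, int i) {
    for (int j = 0; j < static_cast<int>(N); ++j) {
        a = update(a, i, j);
    }
    return a;
}
```

Running `step_array` in forward and in reversed order produces the same array, which is exactly the property the vectorizer relies on; no such reordering is possible for `step_scalar`.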