Difference between revisions of "Testing"



Revision as of 14:27, 17 January 2019

Info: This page is meant for Medusa developers.

We have four different kinds of tests in this library:

- unit tests

- style checks

- documentation checks

- examples

The ./run_tests.py script controls all tests:

usage: run_tests.py [-h] [-c] [-b] [-t] [--build-examples] [-e] [-s] [-d]

Script to check you medusa library.

optional arguments:
  -h, --help          show this help message and exit
  -c, --configure     check your system configuration
  -b, --build         build the library
  -t, --tests         run all tests
  --build-examples    build all examples
  -e, --run-examples  run selected examples
  -s, --style         style checks all sources and reports any errors
  -d, --docs          generate and check for documentation errors

Before pushing, always run ./run_tests.py.

This script compiles and executes all tests, checks coding style, documentation and examples.

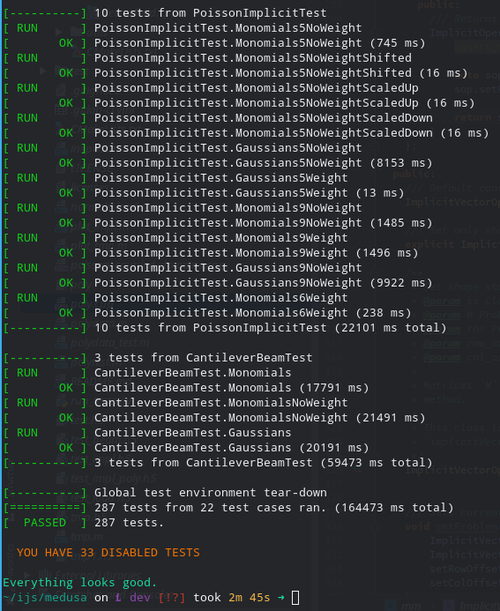

If anything is wrong, you will get a pretty little red error, but if you see green, as in Figure 1, you're good to go.


Unit tests

All library code is tested by means of unit tests. Unit tests provide verification, are good examples and prevent regressions. For any newly added functionality, a unit test testing that functionality must be added.

Writing unit tests

Every new piece of functionality (e.g. an added class, function or method) should have a unit test. Unit tests

- ensure that the code compiles

- ensure that the code executes without crashes

- ensure that the code produces the expected results

- define the observable behaviour of the method, class, ...

- prevent future modifications of this code from accidentally changing this behaviour

Unit tests should test observable behaviour, e.g. if a function gets 1 and 3 as input, the output should be 6. They should test edge cases and the most common cases, as well as expected death cases.

We use the Google Test framework for our unit tests. See their introduction to unit testing for more details.

The basic structure is

TEST(Group, Name) {
    EXPECT_EQ(a, b);
}

Each header file should be accompanied by a <util_name>_test.cpp with unit tests.

When writing unit tests, always write them thoroughly and slowly; take your time. Never copy your own code's output to the test; rather produce it by hand or with another trusted tool. Even if it seems obvious the code is correct, remember that you are also writing tests for the future. If the tests themselves have a bug, it is much harder to debug!

See our examples in technical documentation.

Running unit tests

Tests can be run all at once via make medusa_run_tests or individually via e.g. make operators_run_tests.

The compiled binary supports running only specified tests. Use ./bin/medusa_tests --gtest_filter=Domain* or simply GF="Domain" make domain_run_tests for filtering and ./bin/medusa_tests --help for more options.

Fixing bugs

When you find a bug in the normal code, fix it and write a test for it. The test should fail before the fix and pass after it.

Style check

Before committing, the linter cpplint.py is run on all source and test files to make sure that the code follows the style guide.

The linter is not perfect, so if any errors are unjust, feel free to comment out the appropriate lines in the linter and commit the change.

Docs check

Every function, class or method should also have documentation, as enforced by doxygen, in the header where it is defined. In the comment block, all parameters and the return value should be meaningfully described. It can also contain a short example.

Example:

/**
 * Computes the force that point a inflicts on point b.
 *
 * @param a The index of the first point.
 * @param b The index of the second point.
 * @return The size of the force vector from a to b.
 */
double f(int a, int b);

Any longer discussions about the method used, or long examples, belong on this wiki, but can be linked from the docs.
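A comment block that also carries a short example might look like the following sketch. The function `norm2d` is hypothetical and not part of Medusa; `@code` and `@endcode` are the standard doxygen commands for embedding a code snippet.

```cpp
#include <cmath>

/**
 * Computes the Euclidean norm of a 2D vector.
 *
 * Usage example:
 * @code
 * double n = norm2d(3.0, 4.0);  // n == 5.0
 * @endcode
 *
 * @param x The first component of the vector.
 * @param y The second component of the vector.
 * @return The length of the vector (x, y).
 */
double norm2d(double x, double y) {
    return std::sqrt(x * x + y * y);
}
```

Keeping the embedded example to one or two lines leaves the header readable.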

Continuous build

We use GitLab Runner, an open source project that is used to run your jobs and send the results back to GitLab. It is used in conjunction with GitLab CI, the open-source continuous integration service included with GitLab that coordinates the jobs.

To configure the runner that performs the MM tests on each commit, edit .gitlab-ci.yml in the repository.